“Autonomous Weapons Systems, including the weaponization of artificial intelligence, is a cause for grave ethical concern. Autonomous weapon systems can never be morally responsible subjects. The unique human capacity for moral judgment and ethical decision-making is more than a complex collection of algorithms, and that capacity cannot be reduced to programming a machine, which as ‘intelligent’ as it may be, remains a machine. For this reason, it is imperative to ensure adequate, meaningful and consistent human oversight of weapon systems.” This is what Pope Francis wrote in his Message for the World Day of Peace 2024.

An episode that took place forty years ago should become a paradigm whenever we talk about artificial intelligence applied to war, weapons, and instruments of death.

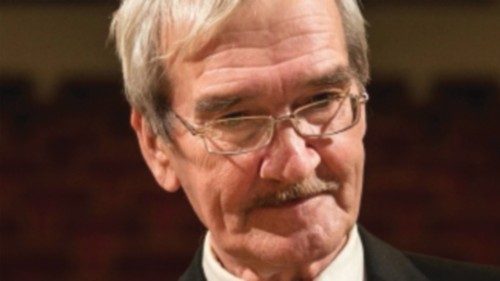

It is the story of a Soviet officer whose decision to defy protocol saved the world from a nuclear conflict that would have had catastrophic consequences. That man was Stanislav Evgrafovich Petrov, a lieutenant colonel in the Soviet Air Defence Forces.

On the night of September 26, 1983, he was on duty in the “Serpukhov 15” bunker, monitoring U.S. missile activity. The Cold War was at a critical juncture. U.S. President Ronald Reagan was investing massive sums in armaments and had recently described the USSR as an “evil empire,” while NATO was engaged in military exercises simulating nuclear war scenarios.

In the Kremlin, Yuri Andropov had recently spoken of an “unprecedented escalation” of the crisis, and on September 1 the Soviets had shot down a Korean Air Lines passenger airliner that had strayed into Soviet airspace over the Kamchatka Peninsula and Sakhalin Island, killing 269 people.

That night, Petrov saw that the Oko computer system, the “brain” considered infallible in monitoring enemy activity, had detected a missile launched from a base in Montana toward the Soviet Union.

Protocol dictated that the officer immediately notify his superiors, who would then give the green light for a retaliatory missile launch against the United States. But Petrov hesitated, reasoning that a real attack would almost certainly be massive. He therefore judged the single missile to be a false alarm.

He reached the same conclusion about the next four missiles that appeared on his monitors shortly afterward, noting that no confirmation had come from ground radar. He knew that intercontinental missiles took less than half an hour to reach their targets, yet he decided not to raise the alarm, stunning the other military personnel present.

In reality, the “electronic brain” was wrong; there had been no missile attack. Oko had been misled by sunlight reflecting off high-altitude clouds.

In short, human intelligence had seen beyond that of the machine. The providential decision not to act had been made by a man whose judgment was able to look beyond the data and the protocols.

Nuclear catastrophe was averted, although the incident remained unknown to the public until the 1990s. Petrov, who died in May 2017, later said of that night in the “Serpukhov 15” bunker: “What did I do? Nothing special, just my job. I was the right man in the right place at the right time.”

He was able to recognize a possible error by the supposedly infallible machine. He was a man capable, to echo the Pope’s words, “of moral judgment and ethical decision-making,” because a machine, no matter how “intelligent,” remains a machine.

War, Pope Francis repeats, is madness, a defeat for humanity. War is a serious violation of human dignity.

Waging war while hiding behind algorithms is even more serious: relying on artificial intelligence to choose targets and how to strike them, and easing one’s conscience because it was the machine that made the decision. Let us not forget Stanislav Evgrafovich Petrov.

By Andrea Tornielli
